Posts tagged with AI

PDF #Zine

„We’re excited to announce a new zine version of Good Night, Tech Right: Pull the Plug on AI Fascism, a deep-dive into the influence of far-Right and neo-fascist politics on the emerging bloc of tech billionaires within the Trumpian state, and its push to cement Artificial Intelligence (AI) as a new regime of capital accumulation.

With anger only rising against techno-fascists like #ElonMusk and calls for protests against #Tesla growing, we hope this zine offers people a resource and chance to start discussions around our shifting landscape.“

https://itsgoingdown.org/good-night-tech-right-pdf-zine/ via @igd_news

#Technology #Fascism #Musk #FckNzs #AI #KI #SurveillanceCapitalism #Capitalism #Protest #Sabotage #politics

“Good Night, Tech Right” PDF Zine

We're excited to announce a new zine version of Good Night, Tech Right: Pull the Plug on AI Fascism, a deep-dive into the influence of far-Right and neo-fascist politics on the emerging bloc of...

It's Going Down

„The Tech-Right is united, but less by their scattered reactionary ideologies, and more by their shared class interests. They want us divided, thinking we’ll fight each other over the scraps they offer us, or by generating the latest outrage on social media platforms they control.

We need to organize and build around our shared class interests, reaching across divisions, around common goals and struggles. We want homes for everyone. We want a livable planet for our children. We want control over our labor. We want to abolish the systems that are destroying us.

In the 1990s, anarchists, labor unions, anti-sweatshop activists, and environmental groups helped mobilize thousands in militant protests against corporate globalization, all under a Democratic president. Using decentralized networks, independent media, and affinity groups, they helped to create a growing movement, rooted in anti-capitalist analysis and direct action. We did it before, we can do it again.“

„The oligarchs want a king. Let’s give them a peasants’ revolt instead.“

Via @igd_news

https://itsgoingdown.org/good-night-tech-right-pull-the-plug-on-ai-fascism/

#Technology #Data #SurveillanceCapitalism #Surveillance #Musk #Zuckerberg #Trump #Fascism #Protest #AI #KI

Good Night, Tech-Right: Pull the Plug on AI Fascism

On January 20th, at a ceremony attended by both far-Right and neo-fascist leaders from around the globe and some of the richest tech billionaires in the world, including the heads of Apple, Google,...

It's Going Down

So, doing better? Got your breath back? Then feel free to come and read.

#blog #blogging #blogger #ki #ai

https://www.jansens-pott.de/ki-ist-fuer-mich-der-moderne-karl-klammer-geworden/?mtm_campaign=mastodon

For me, AI has become the modern Karl Klammer (Clippy).

Remember Karl Klammer, the digital assistant? Isn't that where the whole drama started? Today, AI is Mr. Klammer.

Herr Tommi (Jansens Pott)

OpenAI's viral Studio Ghibli moment highlights AI copyright concerns | TechCrunch

ChatGPT's new AI image generator is being used to create memes in the style of Studio Ghibli, reigniting copyright concerns.

Maxwell Zeff (TechCrunch)

Anyone who believes a law enforcement agency could resist the temptation to use data to maximize its investigative success is naive.

Hesse has already arrived there, and the rest of the country is following. Keyword: the NSA scandal in the USA.

#Datenhunger #AI #MenschlicheNatur #Ehrgeiz #BestIntentions #RoadToHell

#Amazon is just another store.

#Turkey is just another holiday destination.

A #Cybertruck is just another mode of transport.

#Nestle is just another coffee brand.

Factory farmed #chicken is just another source of protein.

#LLMs (#AI) are just another tool.

#Capitalism needs you to ignore the social and environmental costs, and think only of personal benefits.

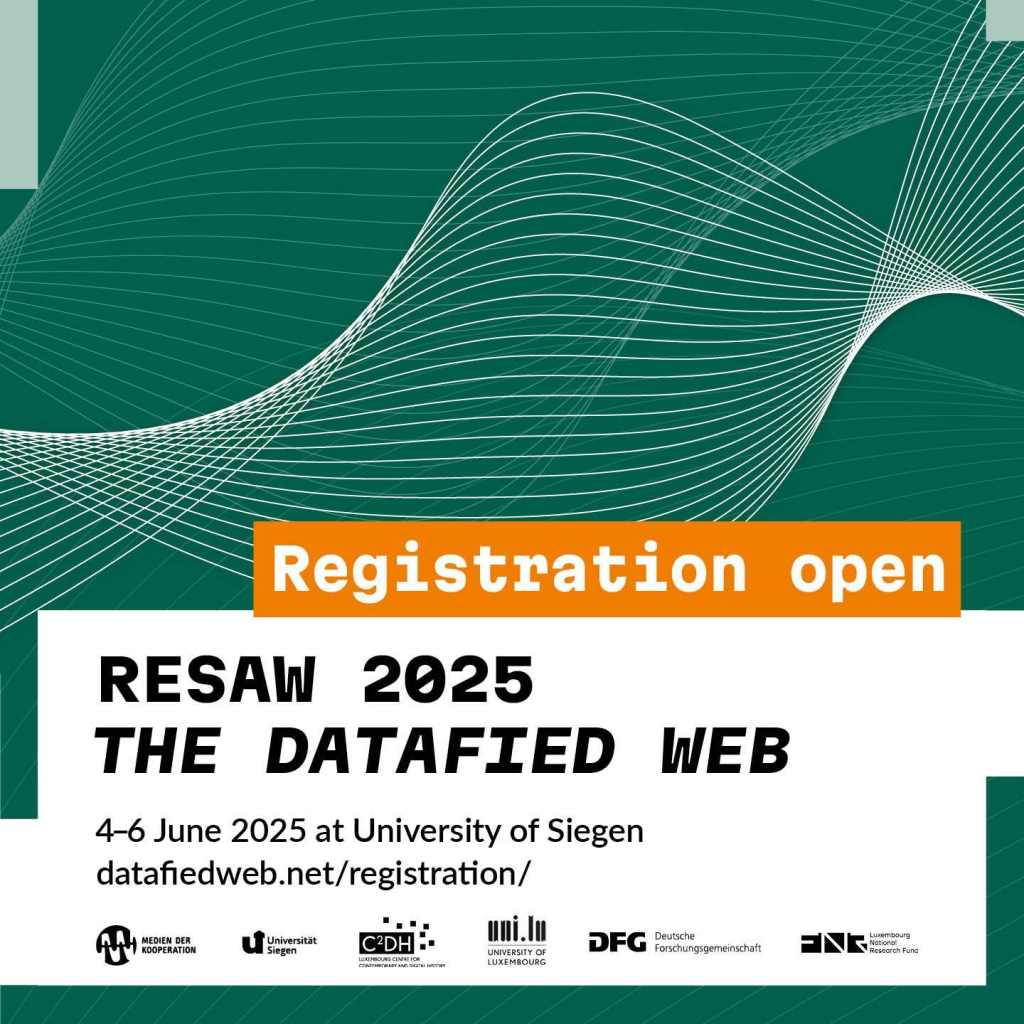

Really looking forward to everything, especially the keynotes by @nthylstrup on "Vanishing Points: Technographies of Data Loss" and @jwyg on "Public Data Cultures"!

#www #history #archive #data #AI #media

A dangerous opening of the floodgates: police get access to health data

https://www.youtube.com/watch?v=chLrTcL2_Co

#Datenschutz #Polizeibehörden #BigBrother

https://gomoot.com/gpt-4o-riceve-la-generazione-immagini-integrata-in-chatgpt

#ai #blog #gpt4o #ia #news #openai #picks #tech #tecnologia

GPT-4o gets integrated image generation in ChatGPT

GPT-4o replaces the DALL-E image generator with a native image generator that can create detailed, consistent images through chat interactions.

Graziano (Gomoot : tecnologia e lifestyle)

In an interview, Weizenbaum researcher Sebastian Koth explains that, in times of AI nationalism, tech oligarchies and a geopolitical race, true innovation lies in decentralized AI infrastructures oriented toward the common good. Science is already leading the way.

Read the interview 👉 https://www.weizenbaum-institut.de/news/detail/ki-innovation-beruht-auf-commons-nicht-big-tech/

#KünstlicheIntelligenz #KI #OpenScience #AI #OpenAI #Musk #Trump #DeepSeek #DOGE #PublicAI #GlobalAIRace

This is a test of federation. But I will be using it the way it was intended: for discussion and respectful disagreement between people. In this case, I will try to argue against the statement in this post by Madiator, while agreeing with some of it.

Read: https://blenderdumbass.org/articles/using_ai_for_art_is_to_disrespect_your_audience___testing_federation_with_madiator_s_blog__

#madiator #fediverse #federation #test #blog #webdev #AI

“Breaking: Met Police installing permanent live facial recognition cameras”

by Skwawkbox @skwawkbox

@UKLabour

“Force slammed for racism, misogyny and homophobia, which serves police state and is stitching up anti-genocide protesters will soon be able to spy on you across the capital. What could go wrong?”

https://skwawkbox.org/2025/03/24/breaking-met-police-installing-permanent-live-facial-recognition-cameras/

#Press #UK #Police #London #MetPolice #Facial #Recognition #Surveillance #AI #Racism #PoliceState #Fascism

Breaking: Met Police installing permanent live facial recognition cameras

Force slammed for racism, misogyny and homophobia, which serves police state and is stitching up anti-genocide protesters will soon be able to spy on you across the capital. What could go wrong? Th…

SKWAWKBOX

=> "Users of the WhatsApp beta version 2.25.7.16 are experiencing an even more blatant attack on their privacy. For them, the “Voice First” feature is included. With it, the AI assistant starts listening to users as soon as it is opened. It keeps doing so until users leave the tool, mute the microphone or switch to keyboard input."

https://netzpolitik.org/2025/angriff-auf-privatsphaere-meta-messenger-fuehren-ki-assistenten-in-europa-ein/

@netzpolitik_feed

#whatsapp #meta #ki #voicefirst #ai #wtf #unplugtrump

Attack on privacy: Meta messengers introduce AI assistants in Europe

In future, users of WhatsApp and co. will be able to chat and even talk with an AI. In doing so, their data is shared with Meta. And a test feature even lets the AI listen in continuously.

netzpolitik.org

https://www.youtube.com/watch?v=6lIkJpfgKr4

#socialContract #TechnoOligarchy #AI #TheExpanse #Injustice #Politics #HumanDignity #SelfPreservation #TechnoFascism #Congo #ChildLabor #Exploitation #WageSlavery #ChildLabour #Fairness #Decency

"The monitoring tools often helped counselors reach out to students who might have otherwise struggled in silence. But the Vancouver case is a stark reminder of surveillance technology’s unintended consequences in American schools.

In some cases, the technology has outed LGBTQ+ children and eroded trust between students and school staff, while failing to keep schools completely safe.

Gaggle Safety Management, the company that developed the software that tracks Vancouver schools students’ online activity, believes not monitoring children is like letting them loose on “a digital playground without fences or recess monitors,” CEO and founder Jeff Patterson said.

Roughly 1,500 school districts nationwide use Gaggle’s software to track the online activity of approximately 6 million students. It’s one of many companies, like GoGuardian and Securly, that promise to keep kids safe through AI-assisted web surveillance."

https://abcnews.go.com/Technology/wireStory/schools-ai-monitor-kids-hoping-prevent-violence-investigation-119705134

#USA #AI #Surveillance #Schools #PoliceState #Gaggle

Schools use AI to monitor kids, but investigation finds risks

Schools are turning to AI-powered surveillance technology to monitor students on school-issued devices like laptops and tablets.

Claire Bryan of The Seattle Times, Associated Press (ABC News)

https://rbfirehose.com/2025/03/24/live-tracker-genai-in-media-70-tools-and-products-from-around-the-world-in-english-and-in-finnish-behind-the-numbers/

#Python #Programming #Coding #IT #Tech #Software #computer #Statistics #DataAnalysis #MachineLearning #DataScience #Jokes #Memes #Funny #NeuralNetworks #AI #DevOps

💻

Python Knowledge Base

https://python-code.pro/

Where curiosity meets code – explore our Python knowledge hub today!

Become a Python expert with our comprehensive knowledge base, covering everything from the basics to advanced topics. Take your coding to the next level.

Andrey BRATUS (python-code.pro)

»Open Source Infrastructure has an AI problem«

– from @niccolove / @niccolo_ve

📺 https://tube.kockatoo.org/w/woce36hTzF6JdnBiBEaRZJ

#opensource #peertube #video #ai #aiart #video #foss #floss #llm #LLMs #attack #freedom

Open Source Infrastructure has an AI problem

You can find all of these videos as written articles, plus some extra content, at https://thelibre.news You can help the channel grow by donating to the following platforms: Paypal: https://paypal.me/ni...

Kockatoo Tube

https://www.saverilawfirm.com/meta-language-model-litigation

#ai #meta #writing

Meta Language Model Litigation

The Joseph Saveri Law Firm, LLP filed a lawsuit on behalf of a class of plaintiffs seeking compensation for damages caused by defendant Meta.

www.saverilawfirm.com

"Building on the work of the AI procurement primer by NYU, Platoniq and Civio facilitated a workshop to start asking how we might create more transparent processes for AI procurement and design, using frameworks for participation and governance such as Decidim.

Procurement and design often take place through a closed technical or legal process. The workshop aimed to start a conversation and begin developing ideas on how to open up public AI governance and support safety, transparency, and public participation for the services and tools that affect regular people’s everyday lives."

https://journal.platoniq.net/en/wilder-journal-2/futures/participatory-procurement-design-ai/

#AI #ParticipatoryProcurement #AIDesign #AIGovernance

Participatory Procurement and Design of AI

In the past decade, we have seen an increase in the initiatives related to the growth and development of AI, and so has government design and acquisition of AI systems.

Nadia Nadesan

"'I need lethal machine learning models, not equitable machine learning models,' Hegseth said."

You f-ing noob, lethality without clarity on what you're killing is worse than useless, you tool.

https://thehill.com/policy/defense/5206516-pentagon-cancels-wasteful-spending/

#USpol #hegseth #defense #defence #AutonomousWeapons #ai

I just read it while working on the Wikipedia article about shadow libraries, and it’s a fascinating history. https://en.wikipedia.org/wiki/Shadow_library

I fear the already fraught conversations about shadow libraries will take a turn for the worse now that it’s overlapping with the incredibly fraught conversations about AI training.

#AI #LibGen #OpenAccess

"LLM scrapers are destroying the infrastructure of FOSS projects, and it keeps getting worse."

https://thelibre.news/foss-infrastructure-is-under-attack-by-ai-companies/

#OpenSource #FOSS #LLM #KI #AI

FOSS infrastructure is under attack by AI companies

LLM scrapers are taking down FOSS projects' infrastructure, and it's getting worse.

Niccolò Venerandi (LibreNews)

「 The Spanish bill, which needs to be approved by the lower house, classifies non-compliance with proper labelling of AI-generated content as a "serious offence" that can lead to fines of up to 35 million euros ($38.2 million) or 7% of their global annual turnover 」

https://www.reuters.com/technology/artificial-intelligence/spain-impose-massive-fines-not-labelling-ai-generated-content-2025-03-11/

#spain #ai #deepfakes #disinformation

https://www.maximizemarketresearch.com/market-report/india-smart-pole-market/33363/

#SmartPoles #IndiaTech #Tesla #Hamas #Gaza #Survivor2025 #Divorce #UrbanInnovation #IoT #SmartCities #DigitalIndia #5G #SustainableDevelopment #AI #SmartInfrastructure

https://www.maximizemarketresearch.com/market-report/global-smart-speaker-market/2355/

#SmartSpeakers #VoiceAssistants #AI #IoT #HomeAutomation #TechTrends #Tesla #Hamas #Gaza #Survivor2025 #Divorce #MarketGrowth #SmartHome #Innovation

Smart Speaker Market - Industry Analysis and Forecast 2030

Smart Speaker Market was valued at USD 11.39 Billion in 2023, and it is expected to reach USD 34.40 Billion by 2030.

Maximize Market Research Pvt Ltd
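The preview quotes two market sizes but no growth rate. The sketch below recovers the average annual growth those two figures imply. It assumes plain compound annual growth (CAGR) over the 7 years from 2023 to 2030. This is not necessarily how Maximize Market Research calculates its forecast, and the function name `implied_cagr` is hypothetical.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # Solve end = start * (1 + r) ** years for r.
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the card: USD 11.39 bn (2023) -> USD 34.40 bn (2030).
rate = implied_cagr(11.39, 34.40, 2030 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```

Run as-is, it prints an implied CAGR of about 17.1%, meaning the forecast assumes the market roughly triples in seven years.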

#AI #AISucks #AIOptOut

Boston Dynamics shows off the progress in the movement capabilities of its "humanoid robot" Atlas.

Scary, scary, scary...

#AureFreePress #News #press #headline #socialmedia #Fediverse #robots #ai

"On the FTC’s website, the page hosting all of the agency’s business-related blogs and guidance no longer includes any information published during former president Joe Biden’s administration, current and former FTC employees, who spoke under anonymity for fear of retaliation, tell WIRED. These blogs contained advice from the FTC on how big tech companies could avoid violating consumer protection laws."

https://www.wired.com/story/federal-trade-commission-removed-blogs-critical-of-ai-amazon-microsoft/

#USA #Trump #FTC #Antitrust #ConsumerProtection #Privacy #BigTech #AI

![Screenshot of Twili Recipes app:

"LLM service: [ ] Perplexety, [ ] Anthropic, [ ] OpenAI

⚠️ Please provide token"](https://friendica-leipzig.de/photo/preview/1024/822269)